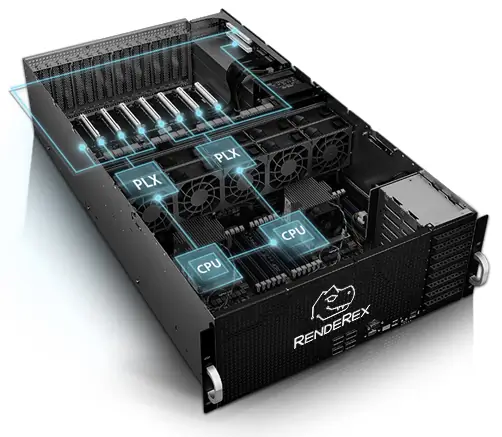

Meet the RAPTOR-8.

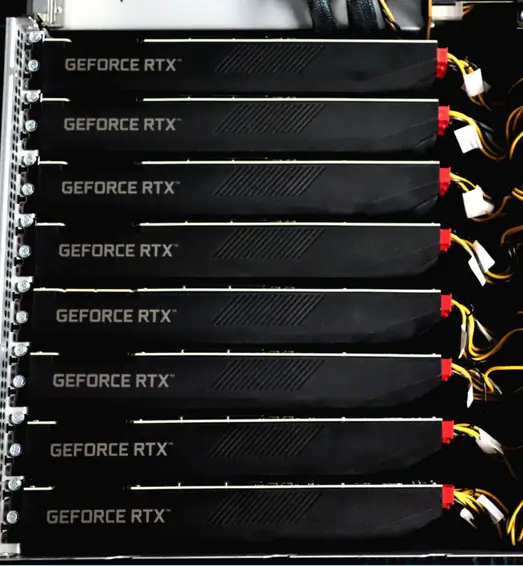

Built to fulfill the most demanding GPU compute requirements, the RAPTOR-8 can be configured with up to eight GPU's for the most demanding GPU compute requirements.

Training & Inference servers

Training & inference servers for AI from RendeRex come in a variety of flavors and configurations.

| Form Factor | # of GPU's |

|---|---|

| 4U | 8 |

| 2U | 8 |

| 2U | 4 |

GPU's

| GPU | Class |

|---|---|

| NVIDIA RTX 4090 | Consumer |

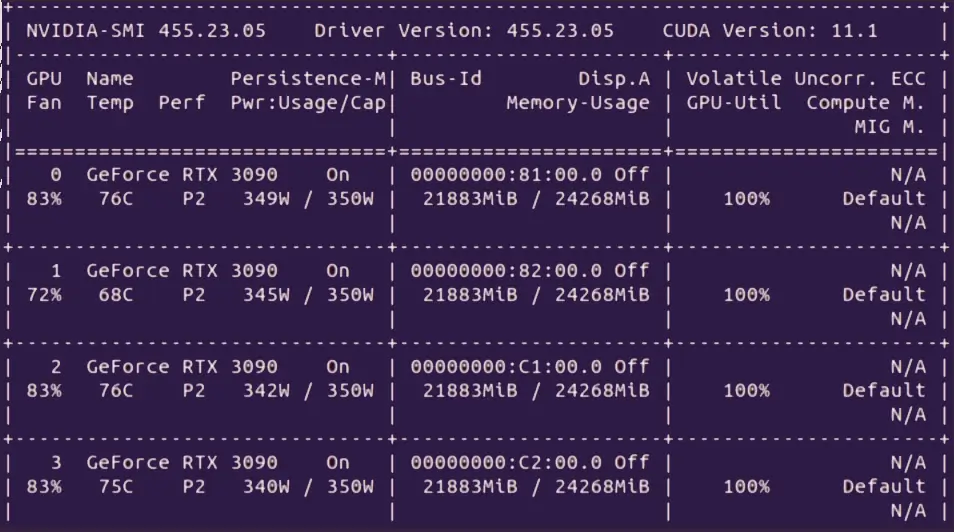

| NVIDIA RTX 3090 | Consumer |

| NVIDIA RTX 6000 ADA | Enterprise |

| NVIDIA H100 80GB PCIe | Enterprise |

| NVIDIA A100 80GB PCIe | Enterprise |

| NVIDIA H100 80GB SXM5 | Enterprise+ |

| NVIDIA A100 80GB SXM4 | Enterprise+ |

*For the NVIDIA H100 80GB SXM5 and NVIDIA A100 80GB SXM4 variant, the SKU is limited in form-factor to a 5U chassis.

Consumer-grade GPU's

Consumer GPU's can be useful for smaller research teams with a limited budget, or in early stages of development. These draw significantly more power than their enterprise counter-parts, but provide enough performance that they make sense financially as a one-off, or in a small cluster. It should be noted however, that there are some functions in Deep Learning applications that are unsupported on consumer-grade NVIDIA cards, and these should be researched independently.

Enterprise-grade GPU's

NVIDIA's enterprise selection of cards for AI is vast, and almost all cards will be supported on our platforms, and can be upgraded / swapped out in the future. The complete list of cards supported in our servers extends beyonds the cards mentioned here (as RendeRex is an approved NVIDIA NPN Partner), however we have included these cards as a reference of the (currently) most popular GPU's for AI training.

Leave the Linux system-administration to us.

Need PCIe passthrough? GPU-enabled Kubernetes? Docker with GPU access? Rather than have your data-science team spend countless, invaluable hours trying to perform Linux system administration to figure out obscure tasks, our team of experienced DevOps/MLOps engineers will set everything up for you, exactly the way you want it, so that your team can focus exclusively on what they do best.

Environment / OS

Depending on how much involvement you'd like from RendeRex, we generally provide three different types of configurations for servers:

| Configuration | Description |

|---|---|

| Ubuntu 18.04/20.04 LTS | Your preferred version of Ubuntu, running bare-metal, pre-configured for AI (pre-installed with NVIDIA drivers, CUDA framework, Docker, etc.) |

| Virtualization Environment | A series of pre-configured Ubuntu-based VM templates (can be configured as Cloud-Init) with GPU pass-through, running in an OSS virtualization environment using QEMU/KVM. Configured so that templates spin up to VM's within minutes with NVIDIA drivers, Docker, etc. |

| Kubernetes | A Kubernetes environment with Docker, optionally pre-installed with KubeFlow, Jupyter Notebook, etc. (nodes built as virtual machines) |

Configurable with up to 8x GPU's.

Up to eight NVIDIA A100's, A30's, A10's and more , we'll design and deploy your server with up to eight enterprise-grade GPU's, single or dual root complex, and enough RAM to avoid bottlenecks in intensive workloads (RendeRex as well as NVIDIA recommend 1.5 times the total VRAM for deep-learning applications). Whatever your requirements are, we'll make sure you get the utmost performance out of your GPU server while staying within your budget.

Networking

| Type | Bandwidth |

|---|---|

| Ethernet | 1Gbps |

| Ethernet | 10Gbps |

| Ethernet | 100Gbps |

| InfiniBand | 100Gbps |

| InfiniBand | 200Gbps |

*HGX A100 servers come with 8x CX-6 200Gbps InfiniBand NIC's (one per-card)

Scale to a Cluster

Scale your RendeRex GPU-Compute nodes to a high-availability Kubernetes cluster. This is especially useful for clients who are starting out in the R&D phase and buy compute nodes on an as-you-go basis, and would like to scale out their compute nodes into a cluster.

Scalable Storage

RendeRex provides several choices in terms of storage. For single nodes, this can be on-board enterprise storage (U.2 NVMe, M.2 NVMe, SATA SSD, etc.), or alternatively as you begin to scale your compute nodes, a dedicated storage node which can then be scaled into a storage cluster for distributed, high-speed storage and later into a tiered-storage solution with an HDD archive.

As you scale your compute solution with RendeRex, we'll convert the local, on-board storage into an NFS cache for you, so that nothing goes to waste.

Get In Touch

If you're interested in one of our solutions or products, or you have a question for us, please get in touch at [email protected], and one of our representatives will contact you shortly. Alternatively, use the form below for a direct quote on one of our products: